“Many of us are trying to identify principles of cortical function and plasticity that generalize across cortical areas and across species,” said Picower Professor Mark Bear.

Bear is one of four Picower Institute professors who’ve made the visual system integral to their work. In their studies they have probed a pivotal region, the primary visual cortex (V1), and related areas to make major contributions to what the field knows about how neurons and neural circuits change and adapt with experience. That’s what Bear means by “plasticity,” which is an essential mechanism for learning and memory. Picower scientists have also employed vision in studies of how brain circuits and systems integrate new information into behavior and cognition. In many cases, what they have learned has had important impacts and implications for neurological and psychiatric diseases.

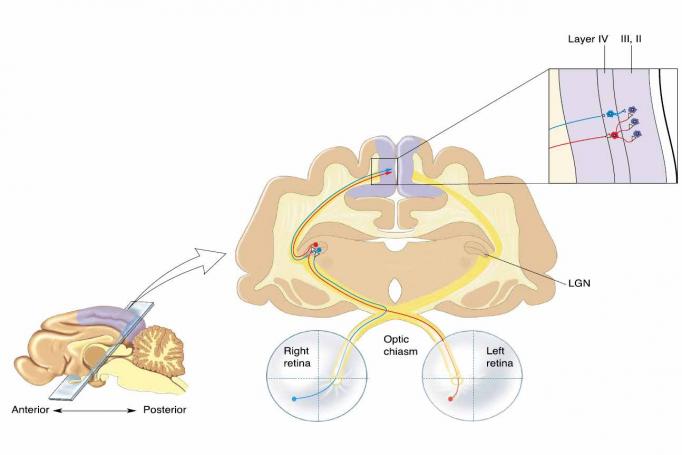

There are many reasons for the special role of the visual system in research on the broader brain. Elly Nedivi, William R. and Linda R. Young Professor, points out that the whole visual system – including the eyes and the optic nerves – is part of the brain, developmentally speaking: like the rest of the brain, it emerges from the “neural tube,” the embryonic structure that produces the brain.

“The eyes are the window into your brain,” Nedivi said. “It’s not just metaphorical.”

Vision is our main channel for acquiring experience. In primates, visual processing occupies about half of the cerebral cortex’s total real estate.

Vision is also convenient. Picower Professor Earl Miller and Newton Professor of Neuroscience Mriganka Sur both note that in the lab, visual stimulation can be varied widely yet controlled finely. Moreover, because the main inputs to the visual cortex arrive essentially directly from the eyes, the neural changes and activity observed there can be related unambiguously to the experimental stimulation.

Such responses are easy to measure because the cortex forms the brain’s surface, so it is fully accessible for imaging or electrical recording in conscious, responsive animals. Finally, decades of research on V1 in many species have made it a well-characterized system, so new findings are readily interpretable.

Seeing the brain change

That history largely began at Harvard in the 1960s and 1970s with David Hubel and Torsten Wiesel, who produced powerful demonstrations of sensory experience reshaping the cortex. When they closed one eye in kittens during the right developmental stage, the kittens lost sight in that eye even after it was reopened, and even though the eye itself remained functional. This paradigm of “monocular deprivation” (MD), and the resulting shift of V1 neurons from serving the deprived eye to serving the non-deprived eye (called “ocular dominance plasticity,” or ODP), inspired many neuroscientists to study plasticity in the visual system.

Among them was Bear, who in the 1980s at Brown University collaborated with physicist Leon Cooper, a co-developer of a theory of synaptic plasticity known as the BCM theory (after its authors, Elie Bienenstock, Cooper and Paul Munro). Neuroscientists knew that the neural connections called synapses would strengthen with strong input, but Cooper’s theory predicted that they should also weaken with weak input. Moreover, the theory predicted that the threshold separating weakening from strengthening would shift with overall neural activity, so that lower activity lowers the threshold and makes strengthening easier.
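The sliding-threshold idea can be made concrete with a toy calculation. The sketch below is a minimal illustration, not Cooper’s or Bear’s actual model: it assumes the common textbook form of the BCM rule, in which a synapse with presynaptic rate x and postsynaptic rate y changes by η·x·y·(y − θ), and in which the threshold θ drifts toward the recent average of y². The function names (`bcm_weight_change`, `update_threshold`, `threshold_after`) and all parameter values are hypothetical choices for illustration. The point it shows: the same modest response weakens a synapse when recent activity has been normal, but strengthens it after a stretch of near-silence, because the quiet period has pulled the threshold down.

```python
"""Toy illustration of a BCM-style sliding-threshold plasticity rule.

Assumptions (not from the article): a single rate-coded neuron, the textbook
form dw = eta * x * y * (y - theta), and a threshold theta that tracks a
running average of y**2. All names and parameter values are hypothetical.
"""


def bcm_weight_change(x: float, y: float, theta: float, eta: float = 0.01) -> float:
    """Weight change for presynaptic rate x and postsynaptic rate y.

    Positive when y > theta (strengthening, LTP-like);
    negative when 0 < y < theta (weakening, LTD-like).
    """
    return eta * x * y * (y - theta)


def update_threshold(theta: float, y: float, tau: float = 10.0) -> float:
    """Move theta one step toward y**2, so the threshold follows recent activity."""
    return theta + (y * y - theta) / tau


def threshold_after(activity_level: float, steps: int = 200, theta0: float = 1.0) -> float:
    """Let the threshold settle while the neuron fires steadily at activity_level."""
    theta = theta0
    for _ in range(steps):
        theta = update_threshold(theta, activity_level)
    return theta


if __name__ == "__main__":
    test_response = 0.5  # the same modest postsynaptic response in both cases
    for label, history in [("normal activity", 1.0), ("after near-silence", 0.1)]:
        theta = threshold_after(history)
        dw = bcm_weight_change(x=1.0, y=test_response, theta=theta)
        print(f"{label}: theta={theta:.3f}, dw={dw:+.5f}")
```

In this toy, a history of steady activity at 1.0 leaves θ near 1.0, so a response of 0.5 produces a negative change; a history at 0.1 drives θ toward 0.01, so the identical response produces a positive change.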

Bear put the theory to the test. In the 1990s he and his lab members demonstrated the predicted synaptic weakening, called “long-term depression” (LTD), in V1 and in the hippocampus, a region known for its role in memory. That suggested LTD was a plasticity rule operating throughout the brain. In 2004 in Neuron, Bear’s lab, which had moved to MIT, showed that LTD of V1 synapses serving the deprived eye helped explain the degradation of vision in mice subjected to MD. The study also found that while closing the eyelid produced this effect, completely silencing the retina with a dose of the neurotoxin TTX (tetrodotoxin) did not: with no activity at all, visual cortex synapses serving the deprived eye did not undergo LTD.

MD models a common visual disorder called amblyopia, in which a cataract or other eye problem during early childhood can lead to ODP, permanently degrading sight through the compromised eye. The typical treatment is to correct the underlying problem and then patch the good eye to force the brain to rely on the weaker eye. But patching isn’t fully effective and doesn’t work after about age 8. Bear and colleagues realized that Cooper’s theory and the effects of TTX might point to a better treatment: using TTX to shut down both eyes completely, but only briefly, wouldn’t cause LTD, yet it would lower the threshold for strengthening. When the TTX wore off, V1 synapses serving both eyes would be primed for a dramatic restrengthening. In a 2016 study in the Proceedings of the National Academy of Sciences (PNAS), Bear and collaborators showed exactly that in two different mammal species, even past their period of early development: “rebooting” the visual system completely reversed the vision loss triggered by MD. The protocol is not yet clinically applicable, but Bear’s lab is building on the results to create one that is. The research, Bear says, shows how a fundamental understanding of plasticity can help rejuvenate neural circuits and activity.

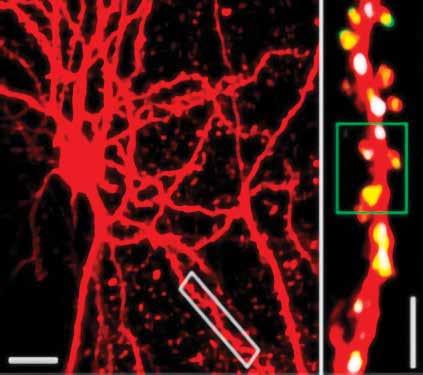

Nedivi’s lab has manipulated visual experience in many papers illuminating the fundamental nature of “structural” plasticity, in which synapses don’t merely become weaker or stronger but actually form anew or disappear. Last year in Cell Reports, for example, she showed that the protein encoded by CPG15, a plasticity gene she has long studied, signals experience to make a newly formed synapse permanent. Synapses come and go, but by manipulating the visual experience of mice and closely tracking individual synapses on V1 neurons, Nedivi’s team showed that when a new synapse participates in a circuit responding to experience, it expresses CPG15, and that is what recruits another protein, PSD95, to cement it.

Back in 2012, Nedivi’s keen interest in adult synaptic plasticity produced an important technical advance. Excitatory synapses were easy to track with “two-photon” microscopy because they typically sit on the conspicuous spines that protrude from dendrites. Inhibitory synapses, however, have no such morphological marker. Working with MIT engineer Peter So, Nedivi devised a way to add a second color to two-photon imaging to specifically label and track inhibitory synapses. In Neuron, the team showed how inhibitory synapses respond to experience by imaging them in V1 as mice underwent visual deprivation. They found that the MD-induced paring down of inhibitory synapses was tightly coordinated with a rearrangement of excitatory synapses on V1 neurons, suggesting local communication between different synapse types.

Sur, too, has used the visual system to make dramatic findings about plasticity on scales ranging from whole brain regions to individual synapses. In 2000, for instance, his lab showed in Nature that when it disrupted input from the ears to the auditory cortex of developing ferrets, so that input from the eyes was rerouted there instead, the brain repurposed the whole region to become an extension of the visual cortex.

Sur’s lab used MD to discover novel mechanisms underlying the weakening of deprived-eye synapses and the strengthening of non-deprived-eye synapses. In several papers, including one with Bear’s lab in Nature Neuroscience in 2010, another in 2011, and others in the Journal of Neuroscience in 2014 and 2018, they showed that these mechanisms involve activity-regulated genes such as Arc, non-coding RNAs such as microRNAs, and even genes implicated in autism spectrum disorders.

Then, in Science in 2018, Sur’s team used sophisticated visual manipulations in mice to identify a new rule of plasticity. Knowing that individual synapses help neurons become tuned to specific locations within a mouse’s visual field, the lab intervened to shift which synapse responded to stimulation at a given location, to see how the neuron would compensate. The team found that as a new synapse strengthened to mark the shift, others nearby quickly weakened, and that this rebalancing was mediated by the protein Arc. Evidence suggests that this compensatory mechanism likely operates well beyond V1, Sur said.

One of Sur’s other fundamental findings about the proteins of synaptic plasticity, based on investigations in V1, has led to a potential therapy for Rett syndrome, a severe autism-like disorder. In 2006 in Nature Neuroscience, his team published a broad survey of synaptic proteins whose regulation differed significantly among developing mice raised in the dark or subjected to MD. A protein called IGF1 stood out: adding it artificially prevented ODP. As they studied IGF1 further, they realized that it promoted synaptic maturation in a way that was missing in models of Rett syndrome. In successive papers, for instance in 2009 and 2014, they showed that the protein’s activity was repressed by the genetic mutation that causes Rett syndrome, and that giving Rett model mice doses of human IGF1 could help correct problems caused by the mutation, not only in the brain but elsewhere. Comparisons with control mice were among their key assays. The findings have led to clinical trials of IGF1 for Rett syndrome patients.

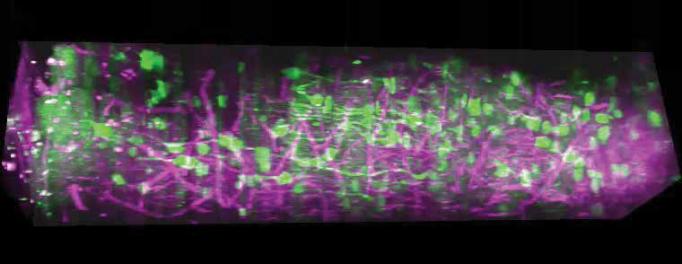

As with Nedivi, Sur’s forays into the visual system have motivated him to drive innovations in microscopy. Two-photon microscopes can image about 0.4 millimeters into the cortex, deeper than traditional optical microscopes, but Sur has sought to observe activity in all six layers of V1, which in a mouse is about a millimeter thick. With the technical expertise of So and postdoc Murat Yildirim, Sur’s lab advanced three-photon microscopy to the point that, in 2019, they imaged neural activity in a live, behaving mouse all the way through a column of V1.

Even before that, in Science in 2008, Sur’s lab used the columnar organization of neurons in ferret V1 to show that astrocytes, star-shaped non-neuronal cells, were closely linked with neurons and had visual responses of their own. The astrocytes also actively regulated blood flow within the cortex, a process that underlies the signals measured by functional MRI (fMRI).

Putting vision to use

V1, of course, is not just a proving ground for plasticity. It’s where the brain begins to make sense of what we see. For a long time, neuroscientists thought it might be hardwired to distill features like shapes and spatial orientations, but Bear’s research has shown that it can learn in ways with direct behavioral significance.

In a 2006 study in Science, his lab showed that visual cortex neurons learn to predict the timing of a reward. In experiments with mice, they paired a flash of light with a later sip of water. At first the neurons were active only during the flash, but after training they remained active from the time of the flash until the reward arrived, as if predicting it. In another set of studies that continues to this day, his lab has documented that when mice are shown the same stimulus repeatedly, V1 neurons show an increased response that lets the brain recognize the stimulus as familiar, a form of visual recognition memory that may be lacking in some people with autism. In a further series of experiments, Bear’s team has documented that visual cortex neurons learn whole sequences of stimuli, producing activity patterns attuned to their specific order and timing. The research showed that the neurons even anticipate missing members of a sequence.

These findings have led Bear to joke that V1 may be all the brain needs, but of course neuroscientists, including Sur and Miller, have investigated in depth how visual information flows into the rest of the cortex, which includes many other visual and vision-related areas, to influence behavior and cognition. In a pair of studies in 2016 and 2018, Sur’s lab traced neural activity across regions as rodents decided whether to move based on visual stimulation (much like hitting the gas upon seeing a green light). The studies implicated the posterior parietal cortex as the locus where visual input is combined with decision-making computations and from which output is sent to motor areas. His lab has many other studies underway on the interplay of brain regions in visually guided decision making.

In some of Miller’s studies of the large-scale patterns of neural activity underlying higher-level cognition, for instance in 2018 in PNAS, he has tracked the information-processing roles of regions stretching from the visual cortex at the back of the brain all the way to the prefrontal cortex at the front. As lab animals carried out a task that required them to categorize visual stimuli, Miller’s team tracked neurons in six regions. Their analysis revealed that even regions with mostly visual responsibilities helped encode information about categorization. They also found that as information moved from sensory to cognitive regions, the dimensionality of the neural activity was reduced. In other words, sensory-focused neurons encoded many aspects of the stimuli, while more cognition-related neurons filtered out irrelevant aspects to focus on fewer properties, the ones relevant to the task. Some people with autism may struggle with that kind of filtering.
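The notion of “dimensionality” can be illustrated with a small sketch. It assumes one common measure, the participation ratio of the covariance eigenvalues, PR = (Σλ)² / Σλ², which roughly counts how many independent directions population activity spreads along; the article does not say which measure Miller’s team used, and the data here are synthetic, not from any study. The helper name `participation_ratio` is hypothetical. A population whose neurons vary independently (a stand-in for sensory-like coding of many stimulus features) scores high, while one driven mostly by two task variables scores close to 2.

```python
"""Toy illustration of reduced dimensionality in population activity.

Assumption: dimensionality is measured by the participation ratio of the
covariance eigenvalues, PR = (sum of eigenvalues)**2 / sum(eigenvalues**2).
This is one common choice, not necessarily the one used in the 2018 study.
All data below are synthetic.
"""
import numpy as np


def participation_ratio(activity: np.ndarray) -> float:
    """activity has shape (trials, neurons); returns a value between 1 and n_neurons."""
    cov = np.cov(activity, rowvar=False)  # rows are trials; np.cov centers each neuron
    eigvals = np.linalg.eigvalsh(cov)  # covariance is symmetric, so eigvalsh applies
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative values from round-off
    return float(eigvals.sum() ** 2 / np.sum(eigvals ** 2))


rng = np.random.default_rng(0)
n_trials, n_neurons = 500, 50

# "Sensory-like" population: each neuron varies independently, as if each
# tracked its own stimulus feature, so activity spreads over many directions.
sensory = rng.normal(size=(n_trials, n_neurons))

# "Cognitive-like" population: neurons mostly reflect two task-relevant
# variables (for example, a category), plus a little private noise.
task_vars = rng.normal(size=(n_trials, 2))
mixing = rng.normal(size=(2, n_neurons))
cognitive = task_vars @ mixing + 0.1 * rng.normal(size=(n_trials, n_neurons))

print(f"sensory-like PR:   {participation_ratio(sensory):.1f}")
print(f"cognitive-like PR: {participation_ratio(cognitive):.1f}")
```

The participation ratio is used here because it needs no arbitrary cutoff on how much variance counts; other measures, such as the number of principal components needed to explain a fixed fraction of variance, would tell the same qualitative story in this toy example.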

But the prefrontal cortex doesn’t always filter. In a seminal 2013 study in Nature, Miller and colleagues found that when animals performed a multifaceted visual discrimination task, prefrontal neurons showed the flexibility to consider as many dimensions of information as were needed, suggesting that neurons aren’t hardwired into just one circuit.

That’s not to say the brain doesn’t have its limits. In studies of how it handles visual information, Miller’s lab has found that individuals vary in their ability to process what they see on different sides of their visual field. The lab has also documented how the brain attempts to hold and juggle multiple items of newly acquired sensory information in working memory at the same time. A series of studies has revealed that the brain uses specific frequencies of brain waves to hold, use and then discard information according to the requirements of the task at hand. High-frequency gamma waves produced in superficial cortical layers encode the new sensory information, guided by task-related information carried by lower-frequency beta waves from deeper layers. In a study in Cerebral Cortex in 2018, though, his lab showed that when the brain tries to store too much visual information, the synchrony provided by these brain waves breaks down, which helps explain why working memory capacity is limited.

There are many ways to learn about the brain, but a productive path to insight is through sight.